Brushes and Algorithms: What the Miniature Painting Community Teaches Us About AI in Creative Work

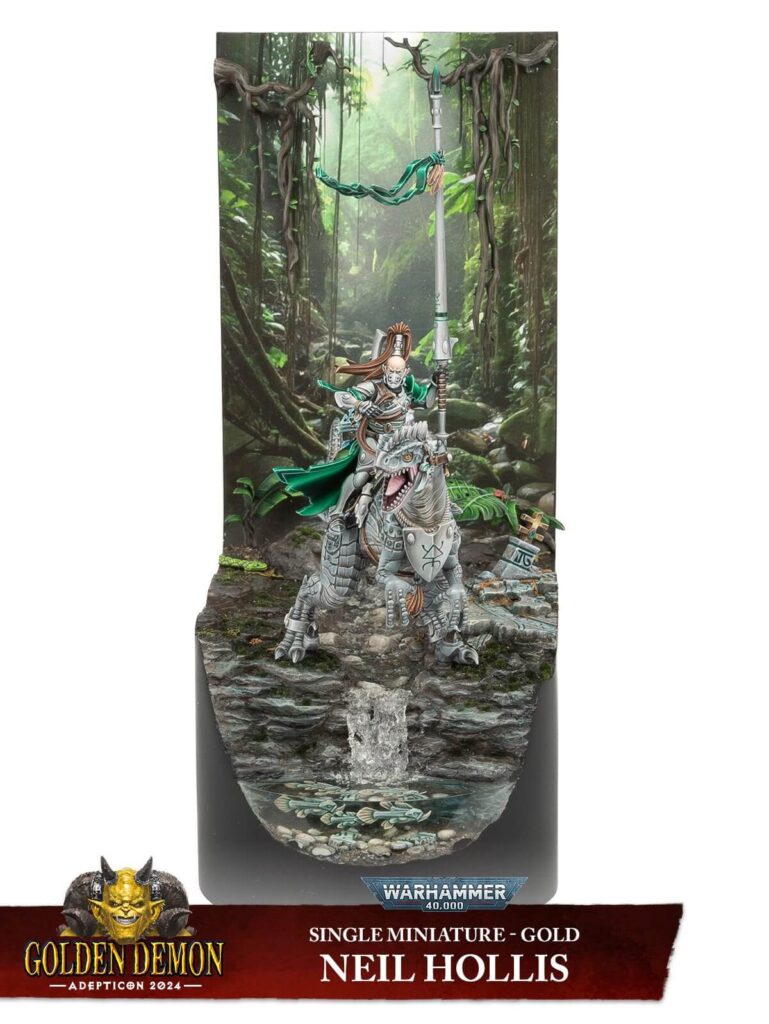

In March 2024, Neil Hollis (@neilpaints on Instagram) stood on stage at Adepticon, holding the Golden Demon award for best single Warhammer 40,000 miniature. His Aeldari Exodite, a dinosaur-riding warrior crafted from converted parts, was undeniably stunning and absolutely deserved the win. The paint job was masterful, the conversion work inspired. Yet within hours, the miniature painting community erupted into fierce debate. The controversy wasn’t about his brushwork or colour choices. It was about the backdrop, a lush prehistoric landscape that Hollis had generated using AI.

By August, Games Workshop had moved decisively, banning all AI-generated elements from future Golden Demon competitions. The message was clear: keep your algorithms out of the painting booth. But this seemingly niche controversy reveals something profound about a challenge facing every engineering leader today. How do we integrate powerful AI tools whilst preserving the human elements that create long-term value?

Neil Hollis’s Golden Demon-winning Aeldari Exodite miniature, showing the masterfully painted figure against the AI-generated forest backdrop that sparked community debate.

Every engineering leader facing AI adoption decisions grapples with this fundamental question. The challenge isn’t technical but rather cultural, developmental, and deeply human. Where do we draw the line between AI as a helpful assistant and AI as a replacement for human thinking, creativity, and growth?

The answer may come from an unexpected source. Miniature painters, among whom I must sheepishly count myself, spend hundreds of hours hand-painting tiny Warhammer figures and collectibles, obsessing over edge highlights and colour theory with the kind of focused intensity that would make any software engineer feel right at home. We’re wrestling with the same AI integration challenges that professional teams face daily, just with smaller brushes and an embarrassing amount of knowledge about Space Marine chapter colour schemes.

Their debates about authenticity, skill development, and the role of technology in professional practice offer surprisingly relevant insights for teams navigating AI adoption. And yes, before you ask, I absolutely have strong opinions about whether Nuln Oil counts as cheating. It doesn’t, by the way. It’s just liquid talent in a pot.

Now, I should probably acknowledge the elephant in the room. Yes, I’m a software engineering consultant writing about miniature painting controversies. If that doesn’t reveal my thoroughly neurodivergent hobby priorities, I don’t know what would. But here’s the thing: after twenty-five years of watching engineering teams grapple with technology adoption, and an embarrassingly similar number of years perfecting my Bad Moons yellow (Averland Sunset, Yriel Yellow, Flash Gitz Yellow, if you must know), the parallels are impossible to ignore.

When a software engineer uses GitHub Copilot to autocomplete boilerplate code, they’re making the same category of decision as a miniature painter using AI to generate reference images for lighting studies. When a team deploys AI to generate test cases, they’re navigating similar territory to painters debating whether AI-generated backdrops belong in competition entries. The underlying tension is identical. How do we leverage AI’s capabilities without undermining the human development that makes sustained technical work possible?

The miniature painting community’s response to AI, from heated controversies to thoughtful integration strategies, provides a compelling case study for any professional team facing similar choices. Their experience reveals frameworks for thinking about AI adoption that preserve human agency whilst embracing technological capability. And if admitting I have three different brushes dedicated solely to edge highlighting doesn’t establish my credibility within this particular niche, nothing will.

The Adepticon Controversy

The Golden Demon controversy illuminates the central tension of our technological moment. There’s a crucial difference between AI as a tool that amplifies human capability and AI as a substitute for human learning and growth. This distinction matters profoundly for software engineering teams, where the same fundamental question arises daily. When does AI pair programming enhance a developer’s architectural thinking, and when does it create dependency that undermines skill development?

The miniature painting community’s struggle with this question offers a framework for professional teams. The issue isn’t whether AI can produce good results but rather whether its integration preserves or erodes the human development that creates sustainable competitive advantage.

What made the Hollis controversy particularly revealing wasn’t the quality of the AI-generated backdrop, which many acknowledged was visually striking. The fracture emerged around intention and integration. The painting itself demonstrated exceptional skill and creativity, yet the AI element felt to many like it crossed an invisible line between assistance and replacement.

This tension between individual capability and technological augmentation mirrors exactly what engineering teams face when evaluating AI tools. The question isn’t whether AI can improve immediate output but rather whether its integration supports or undermines the development of human expertise that enables long-term innovation and adaptability.

The Authenticity Paradox

The miniature painting community has always embraced technological tools. Airbrushes revolutionised large surface painting. Digital photography changed how painters share and learn techniques. 3D printing transformed customisation possibilities. Each of these innovations expanded what painters could achieve whilst preserving the fundamental human elements of artistic decision-making and skill development.

AI, however, presents a different category of tool entirely. Understanding this distinction reveals crucial insights about AI adoption in professional contexts.

I believe AI has tremendous potential within the hobby. It can help research complex lore backgrounds, suggest colour palettes based on environmental themes, or recommend alternative techniques for achieving specific effects. These applications support and enhance the painter’s creative process without replacing the fundamental craft of mixing colours, controlling brush pressure, or developing an artistic eye. The technology serves the artist rather than supplanting them.

Yet when AI generates finished visual elements, something fundamentally different occurs. This is what we might call the authenticity paradox. Traditional tools extend human capability. The airbrush still requires understanding of paint flow, pressure control, and colour theory. The artist’s knowledge and skill determine the outcome. AI image generators, by contrast, embody externally developed aesthetic knowledge. The quality of output depends more on the AI’s training data than the user’s artistic understanding.

This distinction has direct relevance for software engineering. When a developer uses an IDE with intelligent autocomplete, they’re leveraging a tool that responds to their architectural decisions and coding patterns. The tool amplifies their existing knowledge. When they use AI to research best practices or explain complex algorithms, they’re gaining insights that inform their decision-making.

The difference becomes clear when we consider how AI can function as a collaborative partner versus a replacement. Recently, I used Claude Code to help create a reusable dialog component for a Nuxt application that needed to display as a bottom sheet on mobile devices and a traditional modal on desktop. The AI suggested providing both programmatic and declarative interfaces, wrapping the functional component within a composable and provider pattern to enable this flexibility. This wasn’t an alien pattern to me, but it was an interesting implementation that offered genuine advantages for component reuse throughout the codebase.
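To make the shape of that pattern concrete, here is a minimal, framework-free TypeScript sketch of a dialog controller that exposes both a programmatic interface (`open()` returns a promise) and a declarative one (a `state` object a component could bind to). It is not the component from my project: every name here (`createDialogController`, `choosePresentation`, `DialogState`) is hypothetical, the 768px breakpoint is an assumption, and in a real Nuxt application the state would be reactive and shared through a composable and provider rather than held in a plain object.

```typescript
// Hypothetical sketch: these names are illustrative, not from the original project.
type Presentation = "bottom-sheet" | "modal";

interface DialogState<T> {
  isOpen: boolean;
  presentation: Presentation;
  payload: T | null;
}

// Assumed breakpoint: viewports narrower than this are treated as mobile.
const MOBILE_BREAKPOINT_PX = 768;

function choosePresentation(viewportWidthPx: number): Presentation {
  return viewportWidthPx < MOBILE_BREAKPOINT_PX ? "bottom-sheet" : "modal";
}

// The "provider" half: owns the dialog state and exposes both interfaces.
function createDialogController<T, R>(getViewportWidth: () => number) {
  const state: DialogState<T> = {
    isOpen: false,
    presentation: "modal",
    payload: null,
  };
  let resolvePending: ((result: R | undefined) => void) | null = null;

  // Programmatic interface: open the dialog and await the user's answer.
  function open(payload: T): Promise<R | undefined> {
    // If a previous open() is still pending, settle it as dismissed.
    resolvePending?.(undefined);
    state.isOpen = true;
    state.payload = payload;
    state.presentation = choosePresentation(getViewportWidth());
    return new Promise<R | undefined>((resolve) => {
      resolvePending = resolve;
    });
  }

  // Called by the rendered dialog (or by other code) to finish the interaction.
  function close(result?: R): void {
    state.isOpen = false;
    state.payload = null;
    resolvePending?.(result);
    resolvePending = null;
  }

  // Declarative interface: a component binds to `state` and calls `close`.
  return { state, open, close };
}

// Usage: a 375px-wide viewport yields a bottom sheet.
const dialog = createDialogController<string, boolean>(() => 375);
dialog
  .open("Delete this army list?")
  .then((confirmed) => console.log("confirmed:", confirmed));
dialog.close(true);
```

The point of the sketch is the split of responsibilities: one owner of state, two ways in. Whether that trade-off suits a given codebase is exactly the kind of judgement that stays with the developer.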

Crucially, I spent considerable time interrogating the design, understanding the trade-offs, and ensuring I could maintain and extend it in the future. The collaboration didn’t save me time in the short term, but I learned new patterns that I’ll apply again. The AI served as a knowledgeable collaborator, suggesting approaches that expanded my thinking whilst leaving me firmly in control of the architectural decisions.

Contrast this with simply using AI to generate entire functions without understanding the underlying logic. In that scenario, you’re incorporating externally developed knowledge that may not align with your project’s specific needs and constraints, whilst missing the learning opportunities that build enduring competence.

When Shortcuts Undermine Growth

The authenticity question isn’t about technological purity but rather about maintaining the connection between skill development and output quality. This connection is what makes improvement possible and meaningful, whether you’re painting miniatures or building software systems.

Consider the learning implications. A junior developer who uses AI to research architectural patterns and debugging techniques builds genuine expertise over time. But one who consistently uses AI to generate complex algorithms may deliver working features whilst missing the deep understanding that comes from wrestling with algorithmic complexity. They’re optimising for immediate output whilst potentially stunting their sustained development. Similarly, a painter who uses AI for lore research and technique suggestions grows in knowledge and capability, whilst one who relies on AI for challenging visual elements achieves better-looking results in the short term but bypasses the struggles that build genuine artistic capability.

The miniature painting community’s concerns aren’t about technological resistance but rather about recognising that the process of creation is inseparable from the development of technical capability. When we optimise purely for output quality, we may inadvertently undermine the human development that makes sustained professional work possible.

This is a lesson I’ve learned repeatedly, both in consulting rooms and at my painting desk. Whether it’s watching a development team lose architectural thinking skills through over-reliance on frameworks, or catching myself reaching for a wash instead of properly learning wet blending techniques, the pattern is the same. Shortcuts that improve immediate results can undermine sustained capability building.

The Skill Development Crisis

Perhaps the most insightful aspect of the miniature painting debate is how it illuminates the relationship between struggle and learning. Painting a convincing sky, mixing colours to match reference material, or creating realistic lighting effects requires years of practice. These challenges aren’t obstacles to overcome but rather the mechanism through which painters develop artistic judgement and technical capability.

Educational research supports this intuition. What cognitive psychologist Robert Bjork calls “desirable difficulties” are productive struggles that require effort and persistence, and they’re essential for building genuine competence. When AI can generate a perfect backdrop instantly, it eliminates these desirable difficulties. A painter who consistently uses AI for challenging elements may achieve better-looking results in the short term whilst stunting their sustained development.

This pattern appears throughout professional technical work, with direct implications for software engineering teams. Consider these scenarios:

- Code Generation: A developer using AI to generate boilerplate HTTP handlers learns about API patterns and error handling. A developer using AI to generate complex business logic implementations may miss crucial insights about domain modelling and system design.

- Architecture Planning: Using AI to research architectural patterns and trade-offs can accelerate learning about system design. Using AI to generate complete architecture documents may bypass the deep thinking that produces truly robust designs.

- Problem-Solving: AI that helps debug by suggesting investigation approaches supports skill development. AI that provides direct solutions without explanation may create dependency whilst limiting learning opportunities.

The key distinction is whether AI usage preserves the “desirable difficulties” that drive professional growth. Teams that use AI to eliminate all struggle may find themselves technically proficient in the short term but strategically vulnerable in the long term.

Community Values Under Pressure

The Golden Demon controversy revealed something fascinating about how communities define and defend their core values. Miniature painting isn’t just about producing beautiful objects but rather about participating in a culture that celebrates patience, skill development, and the satisfaction of overcoming technical challenges.

When AI enters this ecosystem, it doesn’t just offer new capabilities but rather challenges the fundamental value proposition. If the goal is simply to create visually impressive miniatures, AI offers obvious advantages. But if the goal is to develop personal capability, connect with others through shared challenges, and find meaning through the mastery of difficult skills, AI’s role becomes much more complex.

Professional teams face identical pressures. Engineering organisations don’t exist solely to ship code but rather to build sustainable capability, develop people, and create competitive advantages through human expertise. When AI tools optimise for immediate output whilst potentially undermining these broader goals, teams must navigate the same tension between efficiency and development that miniature painters are grappling with.

The most insightful voices in the miniature painting community aren’t advocating for complete AI rejection or uncritical embrace. They’re asking more nuanced questions. How can we integrate powerful new tools whilst preserving the elements of our practice that create sustained value? How do we maintain the learning culture that makes our community vibrant and self-improving?

These questions translate directly to professional contexts:

- For Engineering Teams: How do we use AI to accelerate development whilst ensuring team members continue building deep technical expertise? How do we leverage AI’s capabilities without creating dependency that limits our ability to solve novel problems?

- For Design Teams: How do we integrate AI-generated concepts and assets whilst preserving the human insight and user empathy that drives effective design decisions?

- For Product Teams: How do we use AI for research and analysis whilst maintaining the strategic thinking and market understanding that enables genuine innovation?

The miniature painting community’s ongoing experiment in balancing efficiency with authenticity offers a roadmap for professional teams facing similar choices.

The NaukNauk Effect

The Golden Demon controversy was just the beginning. Another fault line has emerged around apps like NaukNauk, which uses AI to animate static photographs of miniatures and collectibles. The app promises to “bring your photographs to life” with simple AI animation, creating short videos from still images of painted figures.

For many content creators, NaukNauk represents an easy way to make their social media posts more engaging. Why post a static photo of your painted Space Marine when you can make it appear to move, gesture, or even seem to breathe? The app removes the technical barriers to animation, requiring no understanding of keyframes, timing, or the principles that professional animators spend years mastering.

Yet this convenience has created another division within the community. Some painters report being unfollowed or criticised for using AI animation tools, with community members viewing these shortcuts as inauthentic or somehow undermining the craft. The criticism isn’t about the quality of the painting itself but rather about the presentation method and what it represents.

This dynamic reveals something crucial about how AI adoption affects community trust and creator relationships. The issue isn’t technical capability but rather perceived authenticity and effort. When viewers can’t distinguish between content that required hours of animation work and content generated in seconds by AI, it changes how they value all technical content.

As one reviewer noted, “AI is a touchy subject with some people hating on it whenever they get the chance, regardless if you apply the ‘AI generated’ information some social media platforms require”. This highlights how AI adoption creates cultural divides that extend far beyond the immediate users. It’s a dynamic I’ve observed both in client organisations and across various creative communities, even whilst the miniature painting community itself remains remarkably welcoming and supportive of diverse approaches to the hobby.

Professional teams face identical dynamics. When some developers use AI to generate documentation, write test cases, or create presentations whilst others do this work manually, it creates questions about effort, value, and fairness that extend far beyond the immediate output quality. Teams must navigate not just the technical aspects of AI integration, but the social and cultural implications within their organisation.

The NaukNauk phenomenon also illustrates how AI tools can fragment communities around values rather than capabilities. The painters using these apps aren’t necessarily less skilled; they are making different choices about authenticity, effort, and presentation. Many simply find it genuinely exciting to see their carefully painted models come to life, enjoying the technology for the pure joy of watching their static artwork move and breathe. This creates tensions that professional teams must anticipate when rolling out AI tools, as reactions often split between those who see efficiency or delight in new capabilities and those who worry about preserving traditional approaches.

A Spectrum of Integration

Rather than binary acceptance or rejection, the miniature painting community is developing nuanced positions about different types of AI involvement. This emerging framework offers practical guidance for professional teams:

- Inspiration and Reference: Using AI to generate mood boards, explore colour combinations, or create reference images for painting studies. This preserves human decision-making about how to interpret and implement ideas whilst leveraging AI for ideation. Professional Parallel: Using AI for competitive research, market analysis, or brainstorming sessions where human judgement ultimately guides strategic decisions.

- Process Enhancement: Using AI for tasks like background removal in photography, or generating multiple lighting scenarios to understand how illumination affects form. The creative interpretation remains human, but tedious preparation work gets automated. Professional Parallel: Using AI for code formatting, test data generation, or documentation templating where the strategic thinking remains human but routine tasks get automated.

- Learning Acceleration: Using AI to explain colour theory concepts, suggest blending techniques, or provide feedback on work in progress. This treats AI as a teaching assistant rather than a creative partner. Professional Parallel: Using AI to explain complex algorithms, suggest debugging approaches, or provide code review feedback that enhances learning rather than replacing it.

- Creative Collaboration: Using AI-generated elements as starting points for human modification and interpretation. This might involve generating a basic composition that the artist then extensively reworks and personalises. Professional Parallel: Using AI to generate initial system designs, user interface mockups, or project plans that teams then refine through human expertise and contextual knowledge.

- Partial Delegation: Using AI for specific sub-tasks whilst maintaining human control over the overall creative vision and execution quality. Professional Parallel: Using AI for specific coding tasks like API integration or data transformation whilst retaining human oversight of architecture and business logic.

- Full Delegation: Using AI to generate finished elements with minimal human modification. This is where most of the miniature painting community draws its line, viewing it as fundamentally changing the nature of the work. Professional Parallel: Using AI to generate complete features, systems, or deliverables without substantial human review or modification, an approach that raises similar concerns about skill development and quality control.

This spectrum provides a more sophisticated approach than simple “use AI” or “don’t use AI” decisions. Teams can evaluate where on this spectrum different AI applications fall and make informed choices about which level of integration aligns with their goals for human development and output quality.

The Learning and Teaching Disruption

One of the most profound implications of AI adoption, clearly visible in the miniature painting community, is how it changes knowledge transmission. Traditional learning in technical fields relies heavily on observing and emulating the process of skilled practitioners. When advanced painters demonstrate techniques, novices learn not just what to do but how to think about colour, composition, and technical challenges.

AI disrupts this transmission mechanism. When experienced painters use AI for challenging elements, they can no longer fully demonstrate their problem-solving process. The pathway from novice to expert becomes less clear, potentially weakening the community’s ability to develop new talent.

This has direct implications for professional teams:

- Mentorship Challenges: Senior developers who rely heavily on AI for complex implementations may struggle to teach junior developers the reasoning behind technical decisions. The mentorship process depends on being able to demonstrate thinking patterns, not just final solutions.

- Knowledge Transfer: When experienced team members use AI for system design or architecture decisions, it becomes harder to transfer the strategic thinking and trade-off evaluation skills to other team members.

- Institutional Memory: Teams that become dependent on AI for certain types of work may lose the institutional knowledge needed to maintain, debug, or extend those systems when AI assistance isn’t available.

The miniature painting community is experimenting with solutions. Some painters create “process videos” that show their thinking even when using AI tools, whilst others deliberately practise challenging techniques manually to maintain their teaching capability. Professional teams can adopt similar approaches, ensuring that AI usage doesn’t break the chains of knowledge transmission that keep teams capable and adaptive.

The Economics of Technical Work

The miniature painting community’s AI debates also reveal important insights about how AI changes the economics of technical work. When AI can produce a stunning backdrop in seconds, it changes the value proposition of spending hours painting one by hand. This forces a reconsideration of what aspects of professional practice provide genuine value.

For professional teams, similar dynamics apply:

- Skill Premiums: As AI handles routine tasks, the premium for uniquely human skills increases. Strategic thinking, creative problem-solving, and complex judgement become more valuable. Teams need to identify and invest in capabilities that remain distinctively valuable.

- Time Allocation: When AI accelerates certain types of work, teams can choose to either increase output volume or invest the saved time in higher-value activities. The choice determines whether AI adoption creates sustainable competitive advantage or just temporary efficiency gains.

- Quality Standards: As AI raises the baseline quality for certain outputs, it may also raise expectations. Teams may find themselves needing to invest more in distinctively human elements to maintain competitive differentiation.

- Competitive Dynamics: Early AI adopters gain efficiency advantages, but as adoption becomes widespread, the competitive benefit shifts to teams that integrate AI most thoughtfully whilst preserving human strengths.

The miniature painting community shows how these economic pressures play out in practice. Painters are discovering that as AI handles certain technical challenges, the community increasingly values elements that remain distinctively human. Personal style, creative interpretation, and the visible evidence of skill development over time become more important.

Professional Implications: Building AI Integration Strategies

The miniature painting community’s experience suggests several principles for professional AI integration:

- Preserve Learning Pathways: Ensure that AI usage doesn’t eliminate the experiences that build sustained capability. If AI handles all complex problem-solving, how will team members develop the expertise to tackle novel challenges?

- Maintain Human Agency: Keep humans in control of strategic decisions and creative direction. AI should amplify human judgement, not replace it.

- Value Process as Well as Outcome: Recognise that how work gets done affects team capability, motivation, and sustainable performance. Pure output optimisation may undermine these longer-term factors.

- Build AI Literacy: Help team members understand how AI tools work and where they’re most appropriately applied. This prevents both over-reliance and unnecessary resistance.

- Experiment Thoughtfully: Try different integration approaches and evaluate their effects on team dynamics, skill development, and output quality over time.

- Maintain Capability Independence: Ensure teams retain the ability to function effectively when AI tools aren’t available or appropriate.

- Consider Community Impact: Think about how AI adoption affects relationships with clients, peers, and the broader professional community.

Looking Forward

As AI capabilities continue advancing rapidly, the challenges identified by the miniature painting community will only intensify. Within the next few years, we can expect AI tools that generate not just static images or simple animations, but complex, interactive content that rivals human-created work across multiple dimensions.

The miniature painting community’s current debates about backdrops and animations may seem quaint compared to future AI that can suggest entire army colour schemes, generate custom transfer designs, or even recommend tactical army compositions based on meta-game analysis. Similarly, the software engineering challenges we face today with code generation and documentation will evolve as AI becomes capable of architecting entire systems, conducting code reviews, or managing deployment pipelines.

Yet the fundamental questions remain constant. How do we preserve the human elements that create sustained value whilst leveraging AI’s expanding capabilities? How do we maintain learning pathways and community connections as AI automates more aspects of technical work? How do we ensure that efficiency gains translate into enhanced human capability rather than dependency?

The miniature painting community’s experience suggests that these challenges are best addressed proactively, before AI capabilities make certain choices irreversible. Communities and organisations that establish clear values and integration principles now will be better positioned to navigate future developments whilst preserving what matters most about human expertise and creativity.

This forward-looking perspective also highlights the importance of viewing AI adoption as an ongoing process rather than a series of discrete decisions. The painters who are most successfully integrating AI tools today are those who regularly reassess their approaches as both technology and community values evolve. Professional teams would benefit from adopting similar adaptive strategies that can evolve with advancing AI capabilities whilst maintaining core human development priorities.

Towards Thoughtful Integration

The miniature painting community’s struggle with AI offers a model for thoughtful technology adoption that professional teams can learn from. Rather than rushing to maximise AI’s capabilities, the most thoughtful painters are asking deeper questions.

What human elements of our practice create the most value, both for individual development and community health? How can we use AI to enhance these elements rather than replace them? Where are the bright lines beyond which AI involvement changes the fundamental nature of our work?

Games Workshop’s eventual ban on AI in Golden Demon competitions wasn’t technological luddism but rather a community defining its values and protecting the elements of practice that make the pursuit meaningful. Professional teams need similar clarity about their non-negotiable human elements.

The most successful AI adoption strategies emerging from the miniature painting community share several characteristics. They preserve human agency in creative decision-making, maintain clear connections between effort and outcome, and support rather than replace the development of human expertise.

For software engineering teams, this suggests a framework for AI adoption decisions:

- Strategic Integration: Use AI to accelerate routine tasks whilst preserving the complex thinking that drives system design and architectural decisions.

- Capability Building: Ensure AI usage supports rather than replaces the development of programming expertise, problem-solving skills, and technical judgement.

- Value Preservation: Maintain the human elements that create sustainable competitive advantage: domain expertise, user understanding, and creative problem-solving.

- Community Consideration: Think about how AI adoption affects team dynamics, knowledge sharing, and relationships with clients and stakeholders.

The Human Element Remains

The debates raging within the miniature painting community ultimately point to a profound truth about the nature of technical work itself. When we strip away the arguments about AI capabilities and efficiency gains, we’re left with fundamental questions about what makes work meaningful and why people choose technical pursuits in the first place.

For the painters spending countless hours perfecting wet blending techniques or achieving the perfect edge highlight, the satisfaction doesn’t come solely from the finished miniature. It emerges from the process of overcoming challenges, developing capability, and expressing something uniquely human through careful attention and deliberate choice. The community’s resistance to certain AI applications isn’t nostalgia or technophobia but rather an intuitive understanding that some shortcuts fundamentally alter the nature of the journey.

This principle extends far beyond miniature painting into every corner of professional technical work. The software engineer who finds deep satisfaction in solving a complex architectural problem isn’t just delivering a technical solution but rather engaging in a fundamentally human process of understanding, creativity, and growth. The satisfaction of wrestling with a difficult algorithm, the growth that comes from debugging a complex system, the team connections built through collaborative problem-solving… these experiences shape both individual capability and organisational culture in ways that pure output optimisation cannot capture.

As AI becomes increasingly sophisticated, we face a critical choice about how we integrate these capabilities into our professional lives. The temptation to optimise purely for efficiency and output quality is understandable, particularly in competitive environments where speed and volume often determine success. Yet the miniature painting community’s experience suggests that this approach risks undermining the very elements that make technical work sustainable and fulfilling over the long term.

The painters who’ve found the most thoughtful approaches to AI integration share a common characteristic. They’ve remained clear about what they value most in their practice. For some, it’s the meditative quality of careful brushwork. For others, it’s the problem-solving challenge of achieving specific visual effects. For many, it’s the community connection that comes from sharing techniques and celebrating each other’s growth. These core values serve as guideposts for AI adoption decisions, helping distinguish between applications that support their practice and those that would fundamentally alter it.

Professional teams need similar clarity about their non-negotiable human elements. What aspects of your work create the most value for sustained capability building? Which processes generate the insights and connections that drive innovation? Where do team members find meaning and satisfaction that sustains their engagement over time? These questions matter as much as technical considerations when evaluating AI integration strategies.

Some might argue that this approach slows innovation or creates unnecessary friction in competitive environments. However, the miniature painting community’s experience suggests that thoughtful AI integration actually accelerates sustainable growth by preserving the human elements that drive continued learning and adaptation. Teams that maintain their core capabilities whilst leveraging AI assistance are better positioned for sustained success than those that optimise purely for immediate efficiency gains.

The miniature painting community is teaching us that the future of AI in technical work isn’t a zero-sum choice between human capability and artificial intelligence. Instead, it’s about finding integration approaches that amplify human strengths whilst preserving the elements of work that drive growth, meaning, and community. Their experience reveals that the most successful AI adoption strategies maintain human agency in strategic decision-making, preserve pathways for skill development, and support rather than replace the collaborative relationships that make technical communities thrive.

This balanced approach requires rejecting both extremes. We must avoid the uncritical AI adoption that treats all efficiency gains as inherently valuable, whilst also resisting the blanket opposition that dismisses potentially beneficial applications. What it demands is the kind of systematic thinking that evaluates each AI application against its impact on human development, community values, and sustained capability building.

In our rush to adopt powerful new tools, we would do well to remember what the painters know. Sometimes the longer path is the only one that gets you where you really want to go. The challenge for professional teams isn’t to resist AI, but to integrate it in ways that preserve and amplify what makes human technical work valuable, sustainable, and deeply satisfying.

The miniature painters are showing us how to hold both efficiency and authenticity, both technological capability and human development. Their experience suggests that with thoughtful integration, we can leverage AI’s power whilst preserving the human elements that make technical work meaningful and communities strong.

This mirrors the broader challenge facing engineering leaders today. The miniature painting community is teaching us that AI adoption, like any significant technological shift, requires systematic thinking about human development, community values, and sustained capability building. These aren’t just technical decisions; they are strategic choices about what kind of organisation you want to become and how you want to compete in an AI-enabled world.

For engineering leaders facing AI adoption decisions, the balanced approach emerging from this unexpected corner of the creative world offers a path forward that maximises both immediate capabilities and sustained human potential. Start by clearly defining what human elements create the most value in your organisation, experiment with AI applications that enhance rather than replace these capabilities, and establish feedback mechanisms that help you course-correct as both technology and team needs evolve. The frameworks developed by painters wrestling with brushes and algorithms may well prove essential for teams navigating the future of technical work.

About the Author

Tim Huegdon is the founder of Wyrd Technology, a consultancy that helps engineering teams achieve operational excellence through structured communication frameworks and process improvement. With over 25 years of experience in software engineering and technical leadership, Tim specialises in helping organisations navigate the complexities of AI adoption whilst preserving the human elements that create sustainable competitive advantage. He guides engineering leaders in implementing thoughtful AI integration strategies that emphasise human-AI collaboration over replacement, systematic approaches to technology adoption that support both efficiency and capability building, and the frameworks necessary to balance immediate productivity gains with long-term team development during periods of rapid technological change.